Apache Spark Tutorial

Introduction

In today's data-driven world, mastering Apache Spark is essential. The Apache Spark tutorial helps you understand this multi-language engine for processing massive datasets efficiently. Whether you're a beginner or an expert, our structured approach will help you learn Spark's core concepts, architecture, and advanced techniques. With real-world examples and exercises, you'll develop the expertise to handle diverse data tasks.

Overview

Welcome to our Apache Spark tutorial! Explore the world of big data processing with speed and efficiency. This guide covers Spark's fundamentals, architecture, and advanced features. Master RDDs, machine learning, Spark SQL, and more through hands-on examples. Learn to write efficient Spark applications for large-scale data tasks. Join us and excel in the world of big data processing.

What is Spark?

Apache Spark is an open-source, fast, and powerful distributed computing framework designed for processing and analyzing large datasets. It excels at tackling complex data tasks, offering speed, scalability, and versatility to handle a wide range of data processing challenges.

Key Features:

1. Speed: For in-memory workloads such as iterative algorithms, Spark can run up to 100x faster than Hadoop MapReduce, because it keeps intermediate data in memory instead of writing it to disk between steps.

2. Ease of Use: With APIs in Python, Java, Scala, R, and SQL, Spark is accessible to a wide range of developers, and its high-level APIs simplify code development.

3. Versatility: Spark's libraries cover diverse use cases, from batch processing to interactive querying and machine learning, all on a single platform.

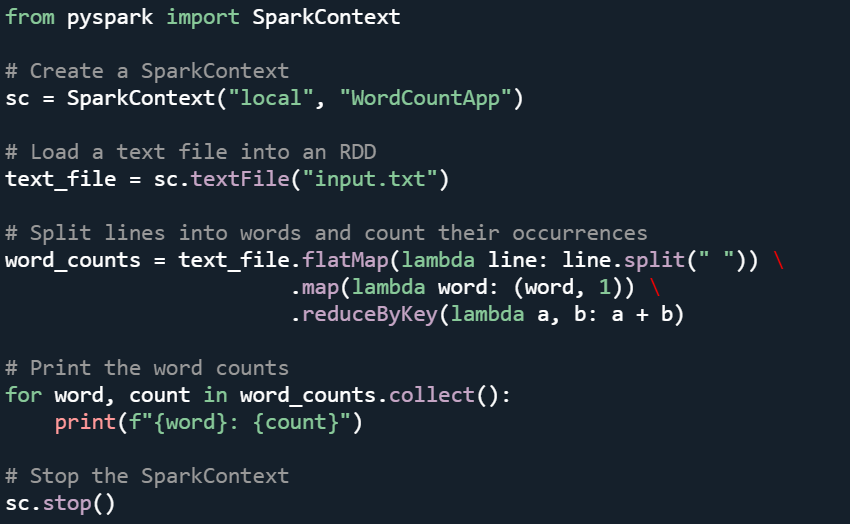

Example: Word Count using Spark:

Consider a scenario where you need to count the occurrences of words in a large text document using Spark:

In this example, an RDD (Resilient Distributed Dataset) holds the lines of a text file. Each line is split into words, and the occurrences of each word are counted. Because the RDD is partitioned across the cluster, Spark runs these steps in parallel, which keeps the word count fast even on very large files.

Here's what the program does:

1. Creates a SparkContext with the application name "WordCountApp".

2. Loads a text file named "input.txt" into an RDD (Resilient Distributed Dataset).

3. Splits each line into words, maps each word to a tuple (word, 1), and reduces by key to count the occurrences of each word.

4. Prints the count for each word.

5. Stops the SparkContext.

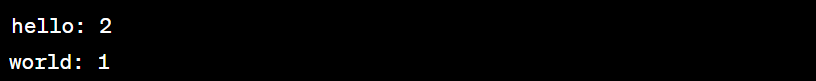

The expected output depends on the content of the "input.txt" file. Assuming the file contains the words "hello", "world", and "hello" (for example, one per line), the output should be something like:

This output represents the word count of each word in the file. The word "hello" appears twice, and "world" appears once.
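To make each step concrete, the same pipeline can be modeled in plain Python. This is a sketch, not Spark code: a list of strings stands in for the RDD, and the hypothetical helpers count_partition and word_count mimic flatMap, map, and reduceByKey. In PySpark the pipeline is typically written as sc.textFile("input.txt").flatMap(lambda line: line.split()).map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b).

```python
from collections import Counter
from typing import Dict, List


def count_partition(lines: List[str]) -> Counter:
    """Work done by one task on one partition: split lines into words (flatMap),
    pair each word with 1 (map), and sum the 1s locally (the map-side combine
    that reduceByKey performs before shuffling)."""
    local = Counter()
    for line in lines:
        for word in line.split():
            local[word] += 1
    return local


def word_count(lines: List[str], num_partitions: int = 2) -> Dict[str, int]:
    """Hypothetical stand-in for the Spark job: partition, count, then merge."""
    # Round-robin split of the input, standing in for an RDD's partitions.
    partitions = [lines[i::num_partitions] for i in range(num_partitions)]
    # Merge the per-partition counts; in Spark this merge happens after a shuffle.
    totals = Counter()
    for partial in map(count_partition, partitions):
        totals.update(partial)
    return dict(totals)


if __name__ == "__main__":
    # Input matching the example above: "hello", "world", "hello" on separate lines.
    for word, count in sorted(word_count(["hello", "world", "hello"]).items()):
        print(word, count)
```

Counting inside each partition before merging mirrors why reduceByKey is usually preferred over groupByKey: less data crosses the network during the shuffle.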

Apache Spark excels in fast and efficient data processing, driving big data analytics, machine learning, graph processing, and more. Its versatile libraries and multi-language support fuel its adoption across data-rich industries.

Apache Spark Architecture

The Apache Spark framework optimizes data processing, is fault-tolerant, and supports multiple programming languages. Its key components and concepts include:

1. Driver Node:

The driver is the entry point for a Spark application: the process that runs the application's main program. It creates the SparkContext (or SparkSession), turns the user's code into an execution plan, and schedules tasks on executors across the cluster.

2. Cluster Manager:

Spark can run on its built-in standalone cluster manager or on external cluster managers such as Hadoop YARN, Kubernetes, or Apache Mesos (deprecated since Spark 3.2). The cluster manager allocates resources, such as executors, to the application; the driver then schedules tasks on those executors.

3. Worker Nodes:

Worker nodes are the individual machines in the cluster that perform data processing tasks.

4. Executors:

Executors are processes that run on worker nodes and execute tasks assigned by the driver.

5. Resilient Distributed Dataset (RDD):

RDD is the fundamental data structure in Spark. It represents an immutable, distributed collection of data that can be processed in parallel across the cluster.

6. Directed Acyclic Graph (DAG):

The execution plan of Spark operations is represented as a DAG, which defines the sequence of transformations and actions to be performed on RDDs.

7. Stages:

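The DAG is divided into stages at shuffle boundaries, which are introduced by wide transformations such as groupByKey or reduceByKey. Within a stage, tasks run independently on each partition without moving data between nodes.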

8. Shuffling:

Shuffling is the process of redistributing data across partitions. It typically occurs when operations like groupByKey or join are performed.

9. Caching:

Spark allows users to persist intermediate RDDs in memory, improving performance by avoiding costly re-computation.

10. Broadcasting:

Small amounts of data can be efficiently shared across the cluster using broadcasting, which reduces data transfer overhead during operations like join.
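Shuffling (item 8) and broadcasting (item 10) are easiest to compare through a join. The plain-Python sketch below is not Spark's implementation, and all function names are hypothetical. It contrasts a shuffle hash join, where both inputs are redistributed by a hash of the join key, with a broadcast hash join, where a small table is copied to every partition so the large input never moves. In PySpark you can request the broadcast strategy with large_df.join(broadcast(small_df), "key"), using broadcast from pyspark.sql.functions.

```python
from typing import Dict, List, Tuple

Record = Tuple[str, object]  # (join key, value)


def hash_partition(records: List[Record], num_partitions: int) -> List[List[Record]]:
    """Shuffle: route every record to the partition chosen by hashing its key,
    so records that share a key land in the same partition."""
    partitions: List[List[Record]] = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[hash(key) % num_partitions].append((key, value))
    return partitions


def shuffle_hash_join(left: List[Record], right: List[Record],
                      num_partitions: int = 4) -> List[tuple]:
    """Both inputs are shuffled by key, then each partition pair is joined locally."""
    joined = []
    for left_part, right_part in zip(hash_partition(left, num_partitions),
                                     hash_partition(right, num_partitions)):
        lookup: Dict[str, list] = {}
        for key, value in right_part:
            lookup.setdefault(key, []).append(value)
        for key, value in left_part:
            for match in lookup.get(key, []):
                joined.append((key, value, match))
    return joined


def broadcast_hash_join(large_partitions: List[List[Record]],
                        small_table: Dict[str, object]) -> List[tuple]:
    """The small side is available in full to every partition; the large side stays put."""
    joined = []
    for part in large_partitions:
        # In Spark, each executor would receive one copy of small_table.
        for key, value in part:
            if key in small_table:
                joined.append((key, value, small_table[key]))
    return joined


if __name__ == "__main__":
    sales = [("A", 10), ("B", 5), ("A", 7), ("C", 3)]  # hypothetical large side
    names = {"A": "Apple", "B": "Banana"}              # hypothetical small side
    print(sorted(shuffle_hash_join(sales, list(names.items()))))
    print(sorted(broadcast_hash_join([sales[:2], sales[2:]], names)))
```

Both strategies return the same inner-join rows; the difference is how much data moves. For two large tables Spark's planner more often picks a sort-merge join, but it too requires shuffling both sides by key.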

Apache Spark Documentation

The Apache Spark documentation offers in-depth guidance on Apache Spark installation, configuration, usage, and component details.

1. Getting Started:

- Installation instructions for various environments (local, cluster, and cloud).

- Quickstart guides for running Spark applications.

2. Programming Guides:

- Overview of Spark's programming model.

- Detailed explanations of RDDs, DataFrames, Datasets, and SQL operations.

- API references for Spark's libraries and modules.

3. Structured Streaming:

Information about Spark's structured streaming capabilities for real-time data processing.

4. Machine Learning Library (MLlib):

Guides and examples for using Spark's machine learning library for building and training ML models.

5. Graph Processing (GraphX):

Documentation on Spark's graph processing library for analyzing graph-structured data.

6. SparkR:

Documentation for using Spark with the R programming language.

7. PySpark:

Documentation for using Spark with the Python programming language.

8. Deploying:

Guides for deploying Spark applications on various cluster managers (Mesos, YARN, Kubernetes).

9. Configuration:

Configuration options and settings to fine-tune Spark's behavior.

10. Monitoring and Instrumentation:

Information about monitoring Spark applications and tracking their performance.

11. Spark on Mesos:

Documentation for running Spark on the Apache Mesos cluster manager.

12. Spark on Kubernetes:

Information on deploying Spark applications on Kubernetes clusters.

13. Community:

Links to mailing lists, forums, and other community resources for seeking help and sharing knowledge.

Spark Tutorial Databricks

Databricks simplifies Apache Spark-based data analytics and machine learning on the cloud. It offers a collaborative environment for data professionals and provides tutorials for using Spark within the platform.

Analyzing Sales Data Using Spark in Databricks

Step 1: Access the Databricks Platform:

Access the Databricks platform through your web browser.

Step 2: Create a Notebook:

1. Click on the "Workspace" tab and then the "Create" button to create a new notebook.

2. Select a name for your notebook, the programming language (e.g., Scala, Python), and a cluster to attach the notebook to.

Step 3: Load Data:

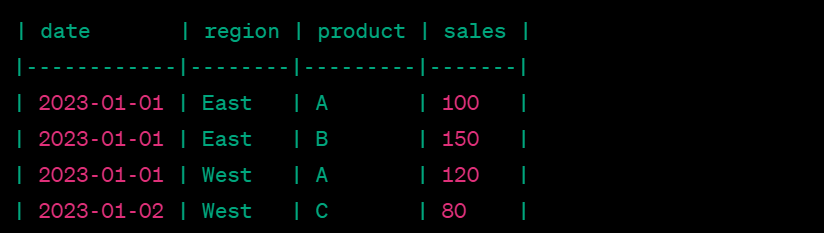

You can load your sales data into Databricks. For example, suppose you have a CSV file named "sales_data.csv" with the following content:

In the notebook, you can use the following code snippet to load a sample sales dataset from a CSV file stored in cloud storage:

```python
# Load data (spark is the SparkSession that Databricks notebooks provide)
sales_data = spark.read.csv("dbfs:/FileStore/tables/sales_data.csv",
                            header=True, inferSchema=True)
```

Step 4: Perform Transformations:

Perform some basic transformations on the loaded DataFrame, such as filtering, grouping, and aggregation:

```python
from pyspark.sql import functions as F

# Filter data for a specific region
filtered_sales = sales_data.filter(sales_data["region"] == "West")

# Group data by product and calculate total and average sales
# (F.sum and F.avg avoid shadowing Python's built-in sum)
product_sales = filtered_sales.groupBy("product").agg(
    F.sum("sales").alias("total_sales"),
    F.avg("sales").alias("avg_sales"),
)
```
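To see what filter, groupBy, and agg compute without a cluster, here is a plain-Python sketch of the same logic. The rows are hypothetical (the real contents of sales_data.csv are not reproduced here), chosen so the West totals match the 120 and 80 quoted in the expected output; sales_by_product is an illustrative helper, not a Spark API.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical rows standing in for sales_data.csv.
sales_rows: List[Dict] = [
    {"region": "West", "product": "A", "sales": 70},
    {"region": "West", "product": "A", "sales": 50},
    {"region": "West", "product": "C", "sales": 80},
    {"region": "East", "product": "A", "sales": 30},
]


def sales_by_product(rows: List[Dict], region: str) -> Dict[str, Dict[str, float]]:
    # Filter: keep only rows for the requested region.
    filtered = [row for row in rows if row["region"] == region]

    # groupBy("product"): collect the sales values per product.
    groups: Dict[str, List[float]] = defaultdict(list)
    for row in filtered:
        groups[row["product"]].append(row["sales"])

    # agg: total and average sales for each product group.
    return {
        product: {"total_sales": sum(values), "avg_sales": sum(values) / len(values)}
        for product, values in groups.items()
    }


if __name__ == "__main__":
    for product, stats in sorted(sales_by_product(sales_rows, "West").items()):
        print(product, stats)
```

Spark performs the same grouping in a distributed way: partial sums and counts are computed per partition, shuffled by product, and then combined.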

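Step 5: Data Visualization:

Databricks lets you create visualizations directly in the notebook. Pass the aggregated DataFrame to display() to render it, then add a bar chart from the output's visualization options to plot total sales per product:

```python
display(product_sales)
```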

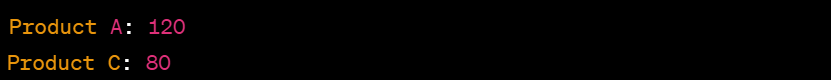

Expected Output:

Upon code execution, Databricks displays a bar chart showing total sales by product for the 'West' region, as illustrated:

Here, Product A has 120 total sales, and Product C has 80 total sales in the 'West' region. Chart appearance may vary in Databricks due to customization.

Step 6: Sharing and Collaboration:

Share your notebook with collaborators by clicking the "Share" button in the notebook interface.

Step 7: Running Jobs and Scheduling (Optional):

You can run your notebook as a job or schedule it to run at specific times.

Step 8: Save and Export:

Save your notebook as you make changes to it, and export a copy (for example, as a source file or HTML) if you need it outside Databricks.

Spark SQL Tutorial

Spark SQL is a part of Apache Spark; it lets you work with structured data using SQL and DataFrames. You can seamlessly integrate SQL queries with your Spark apps.

The following tutorial covers Spark SQL basics with examples:

Tutorial: Using Spark SQL for Data Analysis

Step 1: Access Spark Cluster

Ensure you have a running Spark cluster or environment.

Step 2: Create a SparkSession

In a Spark application, create a SparkSession, which serves as the entry point to using Spark SQL and DataFrame APIs:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .appName("SparkSQL Tutorial")
  .getOrCreate()
```

Step 3: Load Data

Load a dataset into a DataFrame. For this, let's assume you have a CSV file named "employees.csv":

```scala
val employeesDF = spark.read
  .option("header", "true")
  .option("inferSchema", "true")
  .csv("path/to/employees.csv")
```

Step 4: Register DataFrame as a Table

Register the DataFrame as a temporary view so you can run SQL queries on it:

```scala
employeesDF.createOrReplaceTempView("employees")
```

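Step 5: Execute SQL Queries

Run SQL against the registered view with spark.sql("..."), which returns the result as a new DataFrame; call .show() on that DataFrame to print its rows.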
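As a stand-in for running a query against the "employees" view, the sketch below uses Python's built-in sqlite3 module rather than Spark, purely to show what a typical aggregate query over a registered table computes. The columns name, department, and salary and the rows are hypothetical, since the contents of employees.csv are not given. In Spark, the same SQL string would be passed to spark.sql.

```python
import sqlite3

# Hypothetical employee rows; the real columns of employees.csv are not shown.
employees = [
    ("Asha", "Engineering", 95000),
    ("Ben", "Engineering", 85000),
    ("Chen", "Sales", 60000),
]

conn = sqlite3.connect(":memory:")
# Plays the role of createOrReplaceTempView("employees"): the data becomes queryable by name.
conn.execute("CREATE TEMP TABLE employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany("INSERT INTO employees VALUES (?, ?, ?)", employees)

query = """
    SELECT department, COUNT(*) AS headcount, AVG(salary) AS avg_salary
    FROM employees
    GROUP BY department
    ORDER BY department
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
conn.close()
```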

Conclusion

In conclusion, this Spark tutorial covers the fundamentals and versatility of Apache Spark. You now possess a strong foundation to explore its potential, from large datasets to complex machine learning and real-time analytics. Spark is a vital tool in data processing, offering flexibility and speed for diverse projects in data analytics.

FAQs

Q1. How does Apache Spark compare to Hadoop?

A. Apache Spark processes data in memory, offering faster analytics and iterative processing compared to Hadoop MapReduce's disk-based approach. Spark's unified framework also supports real-time processing and various libraries.

Q2. Where can I run Apache Spark applications?

A. Spark applications can run on local machines, on on-premises or cloud-based clusters, and on managed platforms such as Amazon EMR, Google Cloud Dataproc, Microsoft Azure HDInsight, or Databricks.

Q3. How can I perform real-time data processing with Apache Spark?

A. Spark's Structured Streaming API (and the older Spark Streaming API) processes real-time data, by default, as a series of small micro-batches, enabling you to analyze live streams from sources such as Kafka, file directories, and sockets.
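The micro-batch idea can be sketched in plain Python as a streaming word count that keeps a running total across batches. This is not Spark's engine, and the function names are hypothetical; Spark cuts batches by trigger interval, whereas this sketch uses a fixed record count to stay deterministic.

```python
from collections import Counter
from typing import Dict, Iterator, List


def micro_batches(events: List[str], batch_size: int) -> Iterator[List[str]]:
    """Cut the incoming stream into small batches of at most batch_size records."""
    for start in range(0, len(events), batch_size):
        yield events[start:start + batch_size]


def running_counts(events: List[str], batch_size: int = 2) -> List[Dict[str, int]]:
    """Run each micro-batch as a small batch job, merge its result into state kept
    across batches, and emit the full updated table after each batch
    (similar in spirit to the "complete" output mode)."""
    state: Counter = Counter()
    snapshots: List[Dict[str, int]] = []
    for batch in micro_batches(events, batch_size):
        state.update(batch)  # count the words in this batch and add them to the state
        snapshots.append(dict(state))
    return snapshots


if __name__ == "__main__":
    stream = ["spark", "kafka", "spark", "stream", "spark"]  # hypothetical events
    for i, snapshot in enumerate(running_counts(stream), start=1):
        print(f"after batch {i}: {snapshot}")
```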

Q4. Why is Spark's in-memory processing faster?

A. Spark's in-memory processing eliminates the need for frequent disk reads by storing data in memory, leading to faster data access and manipulation for iterative algorithms and interactive tasks.
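The benefit of keeping data in memory across repeated actions can be sketched with a toy lazy dataset that counts how often its lineage is recomputed. ToyDataset is hypothetical, not a Spark class; in Spark the corresponding calls are rdd.cache() or rdd.persist(), and caching is lazy, so the data is stored only when the first action runs.

```python
from typing import Callable, List, Optional


class ToyDataset:
    """Hypothetical stand-in for an RDD: holds a recipe (its lineage) rather than
    data, and only runs it when an action needs the result."""

    def __init__(self, compute: Callable[[], List[int]]):
        self._compute = compute
        self._cached: Optional[List[int]] = None
        self._cache_enabled = False
        self.computations = 0  # how many times the lineage was evaluated

    def cache(self) -> "ToyDataset":
        # Like rdd.cache(): only marks the dataset; nothing is computed yet.
        self._cache_enabled = True
        return self

    def _materialize(self) -> List[int]:
        if self._cached is not None:
            return self._cached
        self.computations += 1
        data = self._compute()
        if self._cache_enabled:
            self._cached = data  # keep the result in memory for later actions
        return data

    # Actions: each one needs the materialized data.
    def count(self) -> int:
        return len(self._materialize())

    def total(self) -> int:
        return sum(self._materialize())


if __name__ == "__main__":
    def squares() -> List[int]:
        return [x * x for x in range(5)]

    plain = ToyDataset(squares)
    plain.count()
    plain.total()
    cached = ToyDataset(squares).cache()
    cached.count()
    cached.total()
    print(plain.computations, cached.computations)
```

Without caching, every action re-runs the whole lineage; with caching, the second action reads the stored result, which is the saving that matters for iterative algorithms.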

Q5. What is Spark SQL, and why is it useful?

A. Spark SQL allows querying structured data using SQL syntax. It integrates seamlessly with Spark's DataFrame API, enabling SQL-like queries on structured and semi-structured data.

Q6. How can I leverage Spark's machine-learning capabilities?

A. Spark's MLlib library provides tools for data preprocessing, model selection, and evaluation using various algorithms. You can use MLlib to create and train machine learning models on your data.
